Game Development


An introduction to ADX2 from CRI Middleware

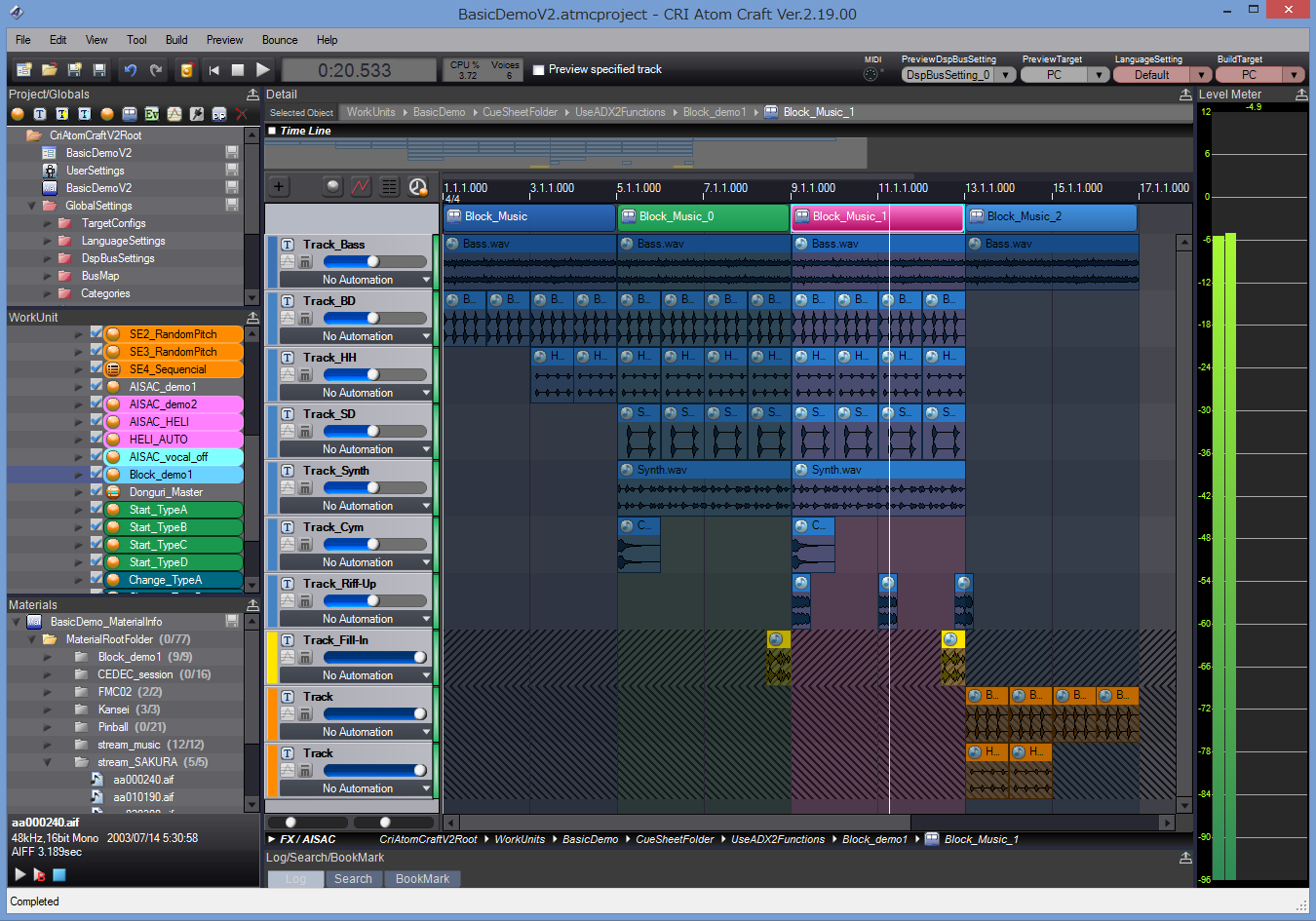

There is a new game audio middleware in town and it’s rocking hard! Except… it’s not really new, has already been used in more than 3000 games and offers all the features you would expect from such a tool, and some more: DAW-like interface with plenty of interactive functions, proprietary audio codecs for blazing-fast playback, an API which is super-easy to work with and to integrate into your game etc…

How is this possible, I hear you ask? Discover ADX2 from CRI Middleware (@CRI_Middleware), one of the best kept secrets of the game audio industry and the de facto standard in Japan, now finally coming to the West.

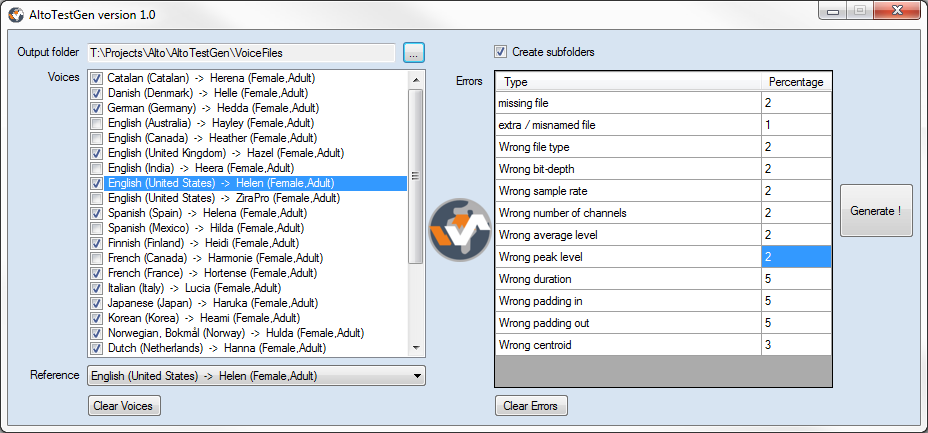

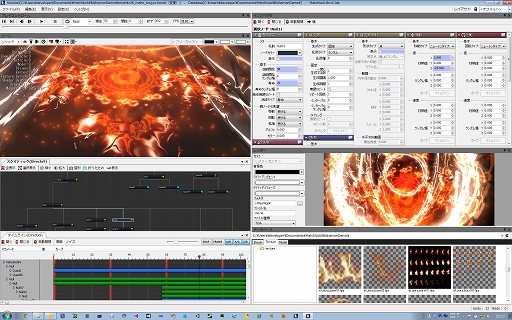

A DAW for your game audio

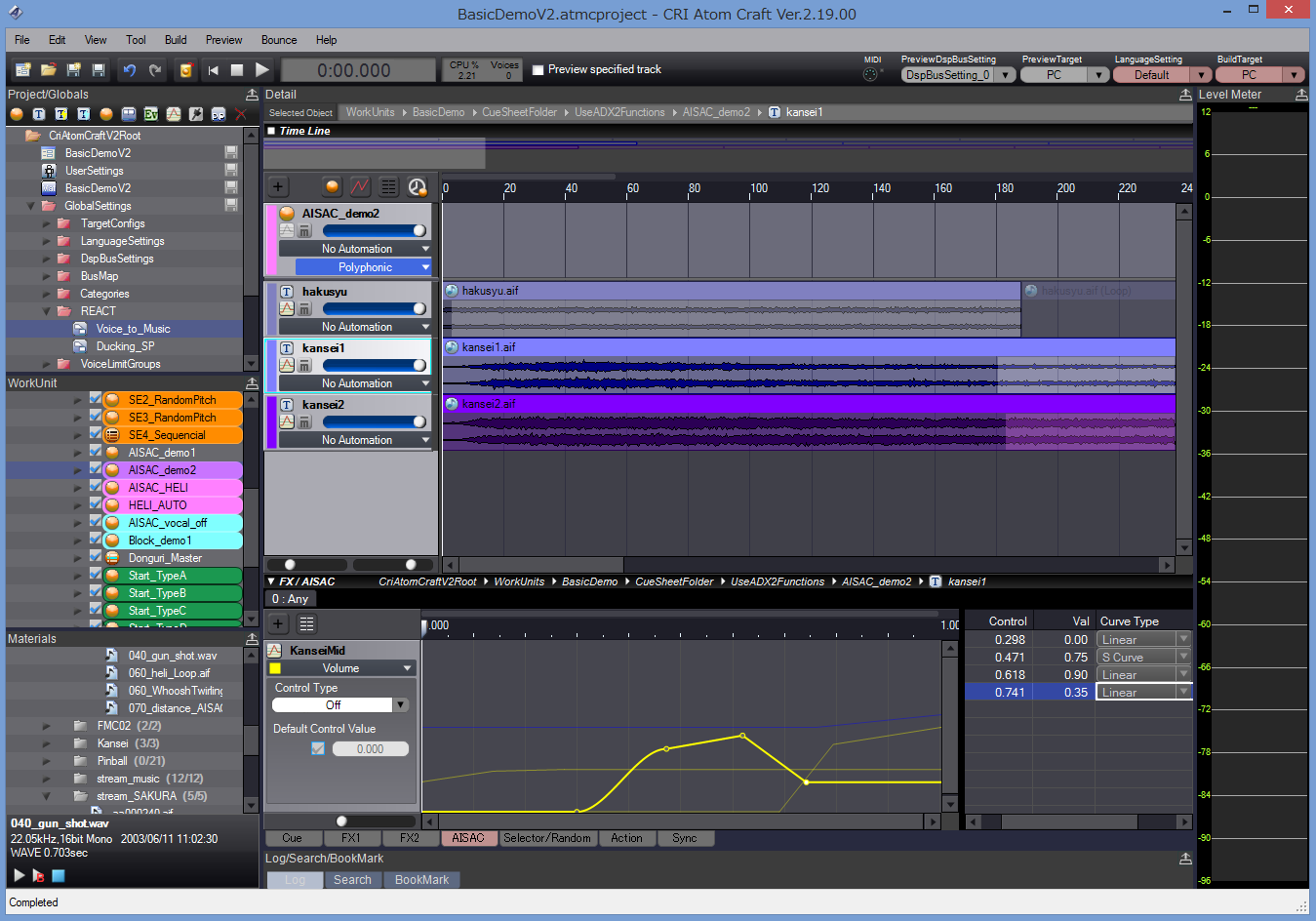

ADX2 is composed of an authoring tool (AtomCraft) and a run-time API. AtomCraft looks and feels like a typical DAW, one that would have been built for game audio. That’s probably why the learning curve is so gentle compared to, say, Wwise. At the same time, when you really need some hardcore interactive features, ADX2 does not stay stuck in the DAW paradigm: it offers the full power of a game audio middleware, but in a very natural way, with arguably one of the best user interfaces available today for this type of tool.

In AtomCraft, you design Cues that are triggered from the game and organized in CueSheets (i.e. sound banks). A Cue is composed of one or more Tracks. Each Track can in turn have one or more waveforms placed on a timeline (they can have different encodings, filters etc…). How these Tracks are played when a Cue is triggered depends on the Cue type. You will find a Cue type for all the usual behaviors (polyphonic, sequential, shuffle, random, switch etc…) and more, such as the Combo Sequential type. This type of Cue directly implements a combo-style sound effect: as long as you keep triggering the Cue within a given time interval, it plays the next Track. If you miss that time window, it goes back to the first Track. So drop your samples, select that type of Cue and start your fighting game in a couple of clicks! Of course, you can nest Cues, call a Cue from another Cue etc… Actions can also be added to a Track timeline to insert events such as parameter changes, loop markers (at the level of the Track) etc…
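The Combo Sequential behavior boils down to a small state machine. The following Python sketch models only that logic and is not the ADX2 API: the class name `ComboSequentialCue`, the `window` parameter and the explicit timestamps are hypothetical, and replaying the last Track once the combo is complete is an assumption (the post does not say what happens at the end).

```python
class ComboSequentialCue:
    """Hypothetical model of a combo-style cue (not the ADX2 API).

    Each trigger that arrives within `window` seconds of the previous one
    advances to the next Track; a late trigger restarts at the first Track.
    """

    def __init__(self, tracks, window):
        self.tracks = list(tracks)  # Track names, in combo order
        self.window = window        # maximum gap between triggers, in seconds
        self.index = 0              # Track played by the last trigger
        self.last_time = None       # timestamp of the previous trigger

    def trigger(self, now):
        """Return the Track to play for a trigger at time `now` (seconds)."""
        in_combo = (self.last_time is not None
                    and now - self.last_time <= self.window)
        if in_combo:
            # Assumption: once the combo is complete, keep replaying the last Track
            self.index = min(self.index + 1, len(self.tracks) - 1)
        else:
            self.index = 0          # first hit or missed window: restart the combo
        self.last_time = now
        return self.tracks[self.index]


cue = ComboSequentialCue(["punch_1", "punch_2", "punch_3"], window=0.5)
print([cue.trigger(t) for t in (0.0, 0.3, 0.6, 2.0)])
# → ['punch_1', 'punch_2', 'punch_3', 'punch_1']
```

The fourth trigger arrives 1.4 s after the third, outside the 0.5 s window, so the combo starts over.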

While Tracks are useful for creating sound layers, the Cue timeline can also be divided vertically into blocks, which is particularly useful for jumping from section to section based on the game context (for example when composing interactive music).

Speaking of the game context, to control the sound from the program, RTPCs (both local and global) can be assigned to pretty much every parameter (including another RTPC), and they come with modulation and randomization features. RTPCs are called AISACs in the ADX2 vernacular.
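At its core, an AISAC (or any RTPC) maps a game-side control value onto a sound parameter through a curve. Here is a minimal sketch assuming a piecewise-linear curve defined by (control, value) points, with the control normalized to the 0 to 1 range; the function name and the example curve are hypothetical, and ADX2 curves may have other shapes.

```python
from bisect import bisect_right


def evaluate_curve(points, control):
    """Piecewise-linear curve lookup (hypothetical, not the ADX2 API).

    points  -- list of (control, value) pairs sorted by control
    control -- game-side parameter value, e.g. a normalized speed
    Controls outside the curve are clamped to the first/last point.
    """
    xs = [x for x, _ in points]
    if control <= xs[0]:
        return points[0][1]
    if control >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, control)          # first point strictly to the right
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    t = (control - x0) / (x1 - x0)         # position between the two points
    return y0 + t * (y1 - y0)


# Hypothetical curve: engine speed (0..1) drives the volume of a sound layer
volume_curve = [(0.0, 0.0), (0.5, 0.8), (1.0, 1.0)]
print(evaluate_curve(volume_curve, 0.25))  # → 0.4
```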

To continue with the DAW analogy, automation curves, mixer and effects are of course also available.

Your idea into the game in no time

Everything in AtomCraft is always a click away. Basic tasks are ultra-fast, while complex interactive behaviors are a pleasure to design. Drop samples from Windows Explorer onto a CueSheet: the samples are added to the project as Materials and a Cue is created with Tracks for the waveforms. You just have to press play! Need a random range on that parameter? Just click the parameter slider and drag the mouse vertically instead of horizontally: there is your random range.

No need to open a randomizer window or to add a modulation parameter, no need to click many times or to open and close windows: features are always right where you need them.

The API has been designed in the same way: uniform and coherent, very easy to learn and to use. Modules are configured, initialized and operated in the same way and API functions follow a clear naming scheme. ADX2 truly empowers both the audio designer and the audio programmer. Depending on the wishes or skillset of your audio team, you can decide to have the sound designers control the whole audio experience, to put the audio programmers fully in charge or a mix of both.

For example, as a sound designer, you could design an automatic ducking system in a few clicks with the REACT feature in AtomCraft (based on the categories the sounds belong to). You could also do it with envelopes or AISACs. The programmer, for his part, could trigger automatic fade-ins / fade-outs with the Fader module at run-time, or he could program every single change by updating the volumes of the sound effects themselves or of their respective categories.

The run-time compares favorably with the competition in terms of speed, in no small part due to CRI’s proprietary codecs (ADX, HCA and HCA-MX), which is especially handy for mobile development. This should not come as a surprise, since CRI Middleware was already assisting Sega Corporation in the early 90s with research into multi-streaming and sound compression technologies, even providing middleware for the Sega Saturn.

Easy integration

ADX2 is part of a middleware package called CRIWARE, which also includes Sofdec2, a video encoding and playback solution with many options, used for example on Destiny. Sofdec2 uses ADX2’s encoder for its audio tracks and shares the same underlying file system (if you want to use it).

CRIWARE in general and ADX2 in particular are available on all common platforms from PC and consoles to handhelds and mobile devices.

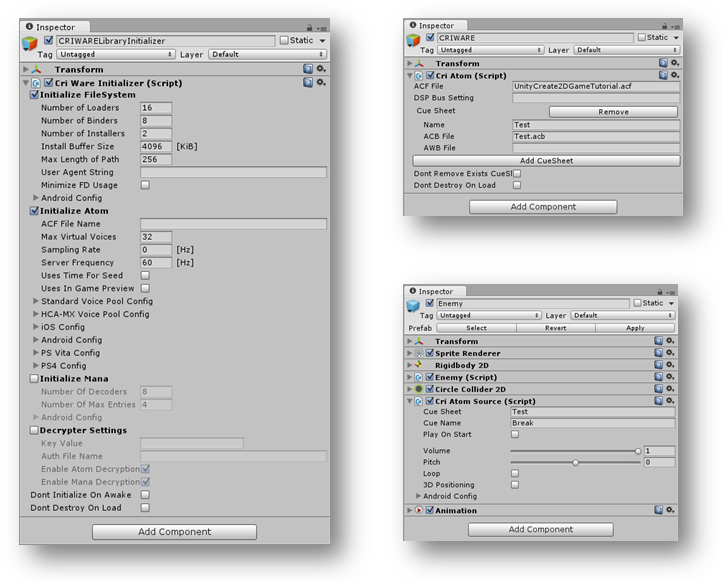

In addition, CRIWARE is available as a plug-in for both Unity and Unreal, making it easy to add top-quality audio and video features to your games.

Should you need more information about ADX2, don’t hesitate to contact me; I have been working with it for the past year and have seen first-hand how it can help you implement interactive audio efficiently.

Even better, if you want to see it in action or try the tool by yourself, you can visit CRI Middleware’s booth at GDC 2016 (booth 442, South Hall) or follow their new Twitter account here: @CRI_Middleware for timely updates.

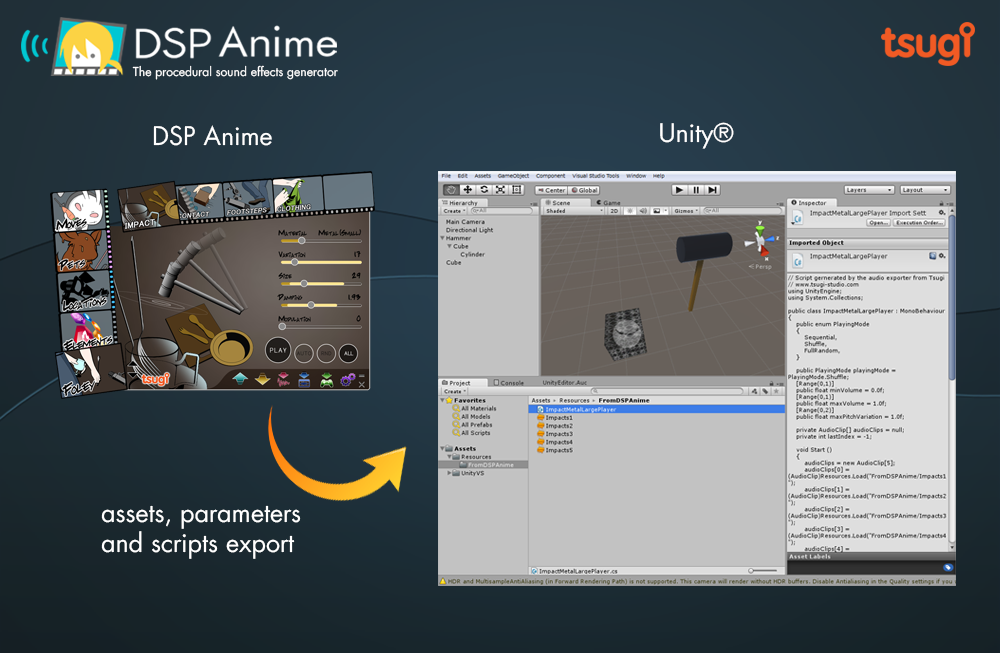

Automatically creating and exporting sounds to Unity

This post is about the Unity export feature in the DSP Anime sound effects generator from Tsugi. It can automatically generate several variations of a sound effect, add them to your Unity project, create the corresponding .meta files and write a script that will allow them to play sequentially or randomly, with or without volume and pitch randomization. All that in one click!

A sound effect generator like no other

DSP Anime is a sound effect creation software especially useful for indie game developers (although a lot of seasoned sound designers also use it to quickly generate raw material they can process later). It’s really as easy as 1-2-3:

1 – You select a category of sounds (for example “Elements”)

2 – You choose the sound model itself (for example “Water”)

3 – You adjust a few pertinent parameters (such as how bubbly the liquid is) to get exactly the sound you want.

That’s all! You can play and save the result as a wave file.

Because DSP Anime uses procedural audio models instead of samples, you are not stuck with a few pre-recorded sounds: you can tweak your effects as you want or easily generate many variations of the same sound to avoid repetitiveness in the game.

Icing on the cake: new categories are regularly released as free downloadable content. Although initially created for “Anime” sounds, the categories are now very varied. They include, for example, a model able to create thousands of realistic impact and contact sounds.

A couple of settings

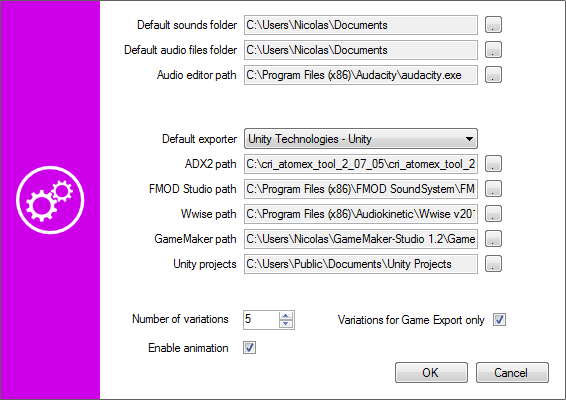

One really interesting feature of DSP Anime is its export to game audio middleware (such as ADX2, FMOD or Wwise). It automatically creates the events and containers, assigns the proper settings and copies the generated wave files into the game audio project.

DSP Anime can also export to game engines such as GameMaker Studio and Unity. We will now take a closer look at the latter. First, make sure that the right exporter is selected in the settings window.

You will also notice that you can enter a number of variations. This is the number of sounds that will be automatically generated based on the parameters you set (and the random ranges you assigned to them) when you export to Unity.
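Conceptually, generating variations means drawing each parameter inside its random range before rendering each sound. Here is a minimal sketch of that idea; the parameter names, the `(base, spread)` representation and the choice of a range centered on the base value are assumptions, not DSP Anime’s internal format.

```python
import random


def make_variations(params, count, seed=None):
    """Draw `count` parameter sets (hypothetical representation).

    params -- dict mapping a parameter name to (base_value, random_range);
              each variation picks a value uniformly in
              [base - range / 2, base + range / 2].
    """
    rng = random.Random(seed)  # seeded so an export can be reproduced
    variations = []
    for _ in range(count):
        variations.append({
            name: rng.uniform(base - spread / 2, base + spread / 2)
            for name, (base, spread) in params.items()
        })
    return variations


# Hypothetical "Water" model: bubbliness has a random range, size does not
water = {"bubbliness": (0.6, 0.2), "size": (0.3, 0.0)}
for v in make_variations(water, count=3, seed=1):
    print(v)
```

A parameter with a zero range keeps its value in every variation, which matches the idea that only the parameters you assigned a random range to will change.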

Exporting to Unity

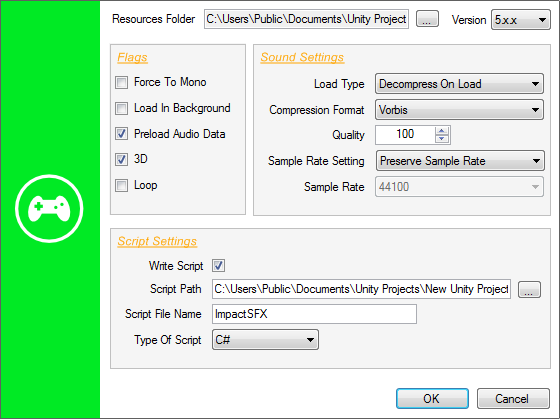

Once you have selected a sound and adjusted its parameters, click the Export button to open the Export window.

You will have to specify the Resources folder in which you want DSP Anime to export the sound files (which will become Unity audio assets). The corresponding .meta files will also be generated based on your sound settings. This folder must be located under the Assets\Resources folder of your Unity project. If you don’t have a Resources folder in your project yet, you will have to create one.

The Force to Mono, Load In Background and Preload Audio Data flags correspond to the Unity audio settings of the same name. They will be automatically assigned to the newly created audio assets. The same goes for the Load Type, Compression Format, Quality, Sample Rate Setting and Sample Rate parameters.

If you decide to generate a script in addition to just exporting the audio assets, you can choose the language (Javascript or C#). In that case, the 3D and Loop parameters determine if the sounds will be played as 2D or 3D sources and as one-shots or loops.

Checking your sounds in Unity

When you press the OK button of the Export window, the following happens:

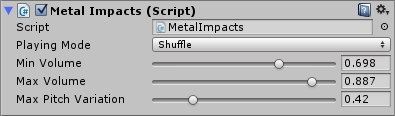

The following picture shows the script parameters as they appear in the inspector in Unity:

The script generated by DSP Anime should be used as a starting point and customized based on the actual requirements of your game. However, it already allows for some interesting sonic behaviors. For example, if you generated several sounds, it will be possible to specify how they will be selected during playback. Three modes are available: sequential, shuffle (i.e. random with no repetitions) and totally random. In addition, the playback volume and pitch can be randomized.
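The three selection modes and the volume/pitch randomization can be illustrated outside Unity. The script DSP Anime generates is in C# or JavaScript; the Python sketch below only shows the selection logic, and the class and parameter names are hypothetical. Shuffle is implemented here with a common approach: play a shuffled copy of the list, reshuffle when it runs out, and avoid repeating the same clip across the reshuffle.

```python
import random


class VariationPlayer:
    """Hypothetical selector for sound variations (not DSP Anime's script).

    mode -- "sequential", "shuffle" (no repetition until every variation has
            played) or "random" (independent draw each time)
    """

    def __init__(self, clips, mode="sequential", volume_range=(1.0, 1.0),
                 pitch_range=(1.0, 1.0), seed=None):
        self.clips = list(clips)
        self.mode = mode
        self.volume_range = volume_range
        self.pitch_range = pitch_range
        self.rng = random.Random(seed)
        self.position = 0   # next index in sequential mode
        self.bag = []       # clips left in the current shuffle cycle
        self.last = None    # previously played clip

    def _next_clip(self):
        if self.mode == "sequential":
            clip = self.clips[self.position % len(self.clips)]
            self.position += 1
        elif self.mode == "shuffle":
            if not self.bag:
                self.bag = self.clips[:]
                self.rng.shuffle(self.bag)
                # Clips are popped from the end: avoid replaying the last clip
                if len(self.bag) > 1 and self.bag[-1] == self.last:
                    self.bag[0], self.bag[-1] = self.bag[-1], self.bag[0]
            clip = self.bag.pop()
        else:  # "random"
            clip = self.rng.choice(self.clips)
        self.last = clip
        return clip

    def play(self):
        """Return (clip, volume, pitch) for the next playback."""
        clip = self._next_clip()
        volume = self.rng.uniform(*self.volume_range)
        pitch = self.rng.uniform(*self.pitch_range)
        return clip, volume, pitch


player = VariationPlayer(["hit_1", "hit_2", "hit_3"], mode="shuffle",
                         volume_range=(0.8, 1.0), pitch_range=(0.95, 1.05),
                         seed=42)
for _ in range(6):
    print(player.play())
```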

10 reasons to use Alto for Game Audio

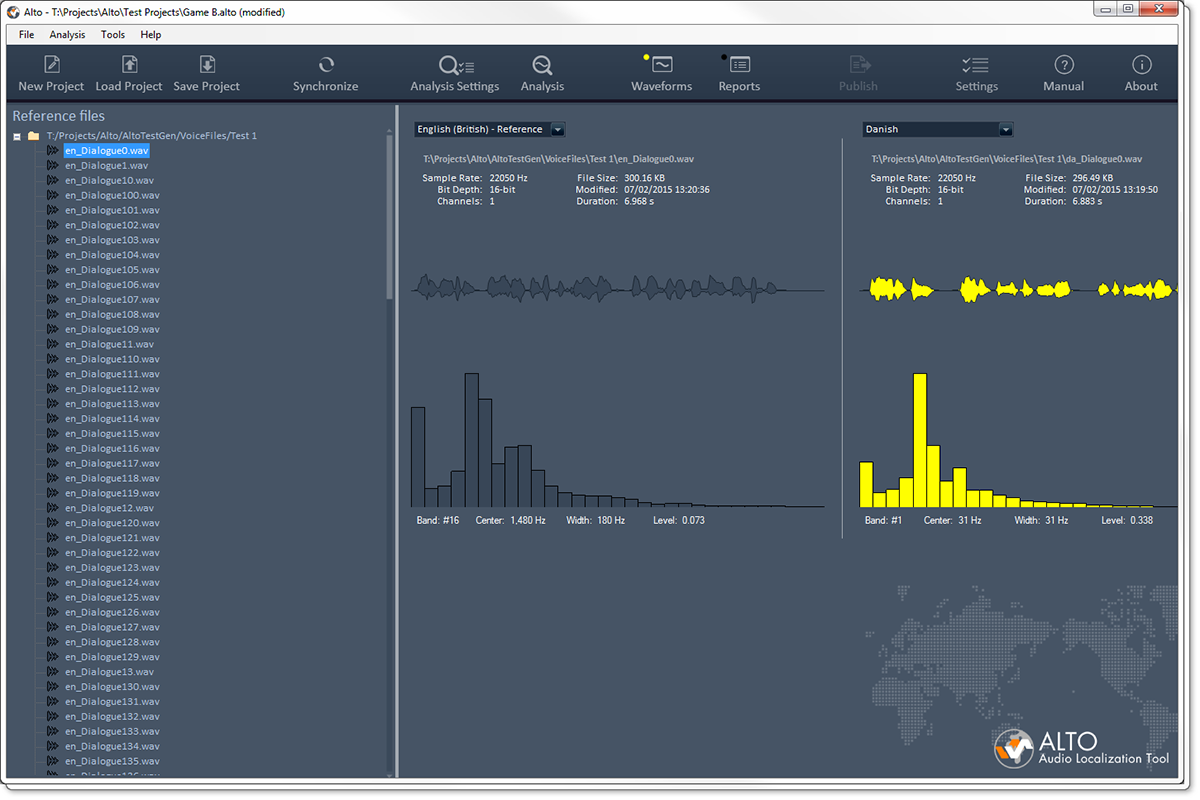

This post describes some of the unique features of Alto and explains why anybody working in game audio should use it!

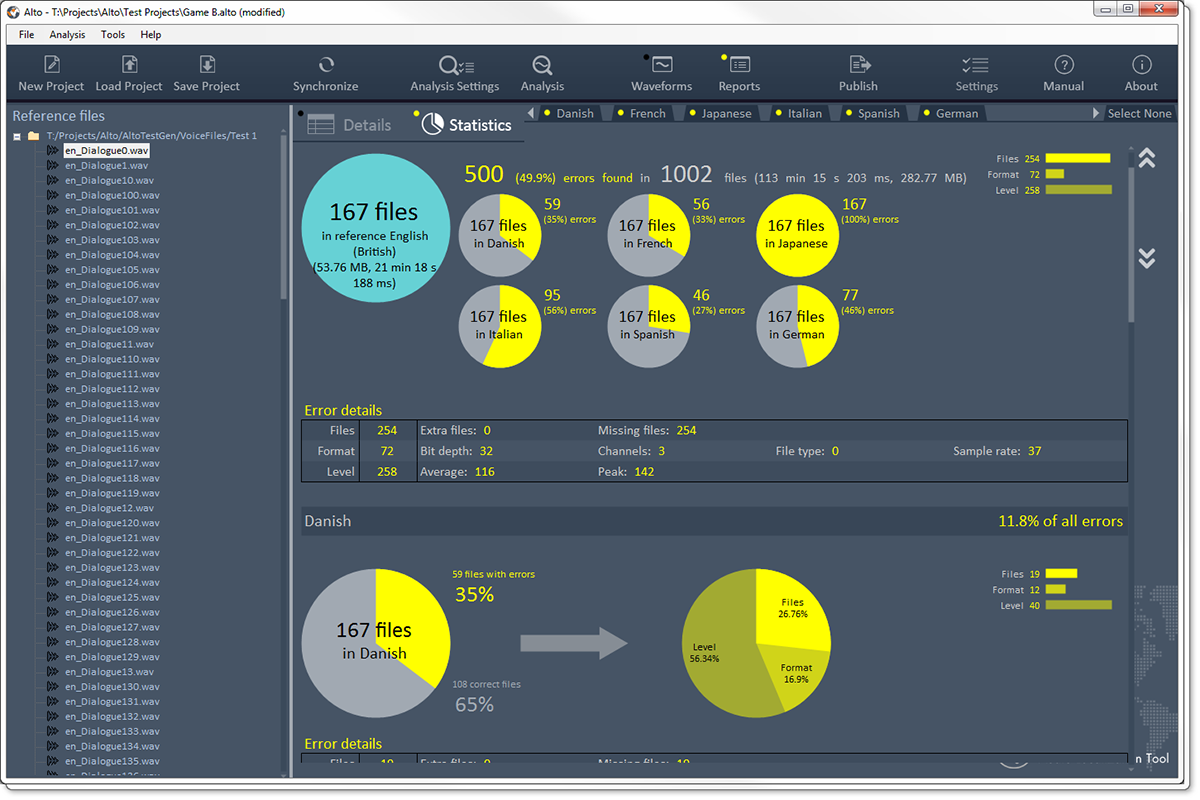

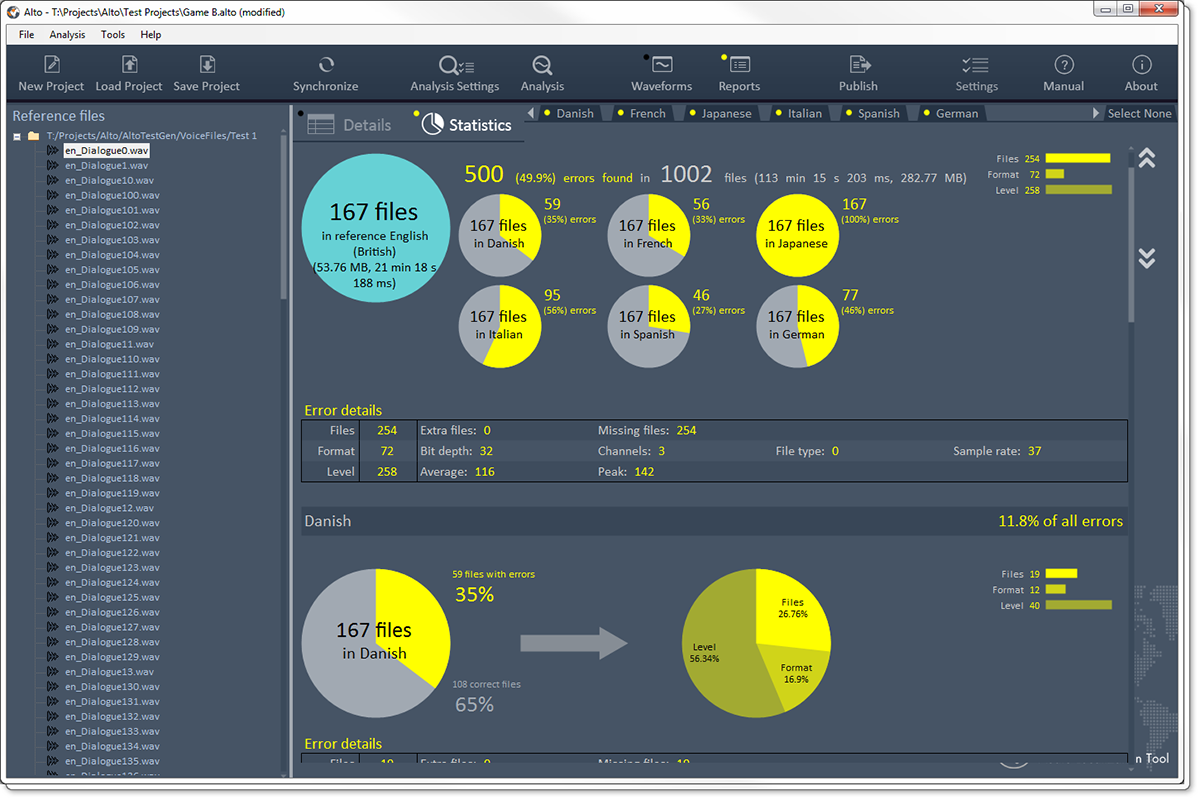

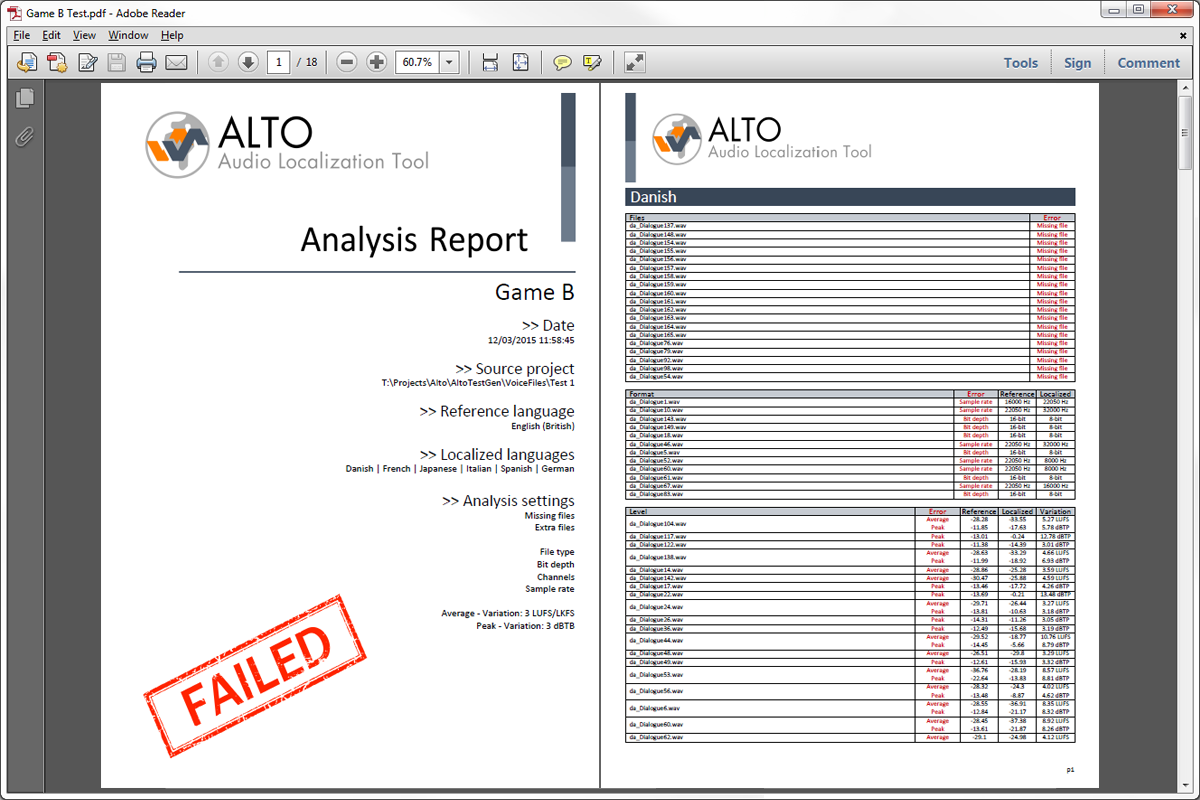

Tsugi just released Alto 2.0. What started as a tool for audio localization quickly became much, much more than that. For less than the price of some metering plug-ins, it will not only analyze and compare all your dialogue files in various localized languages (including loudness, silences, audio format and more) but it will also allow you to correct the files, rename them, test them in context, generate placeholder dialogue etc…

In other words, it will probably save you a lot of time and money while making sure your deliverables stay at the highest quality standard. So here are 10 reasons you should use Alto if you are working in game audio:

1 – It will adapt to your workflow

Alto will work with any file naming convention or file hierarchy. As for the languages, it comes with useful presets for FIGS, ZPHR, CJK, PTB etc… but nothing prevents you from adding Klingon to the list if needed!

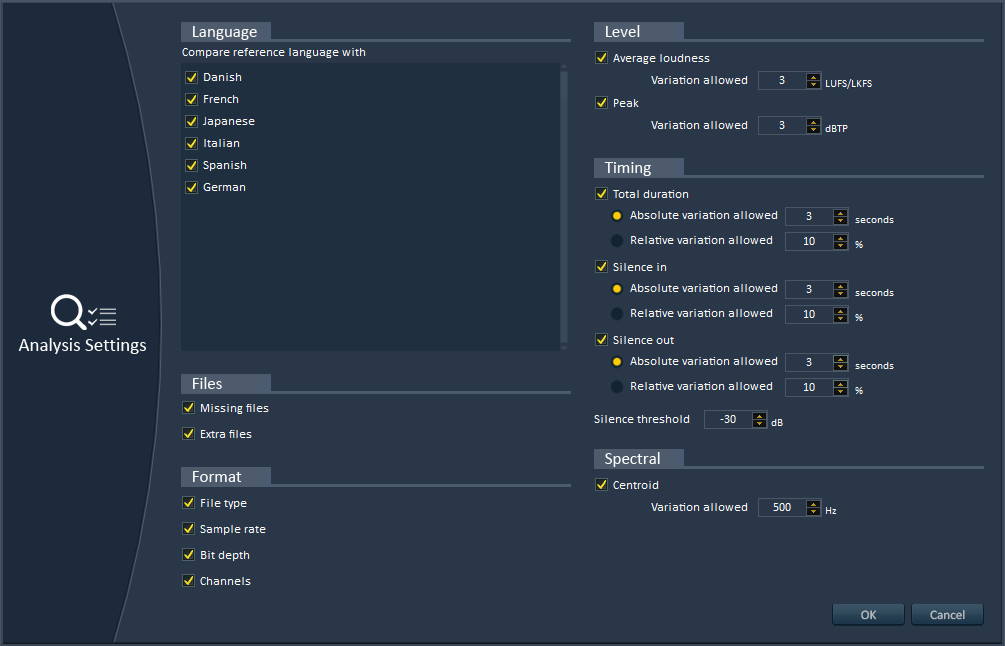

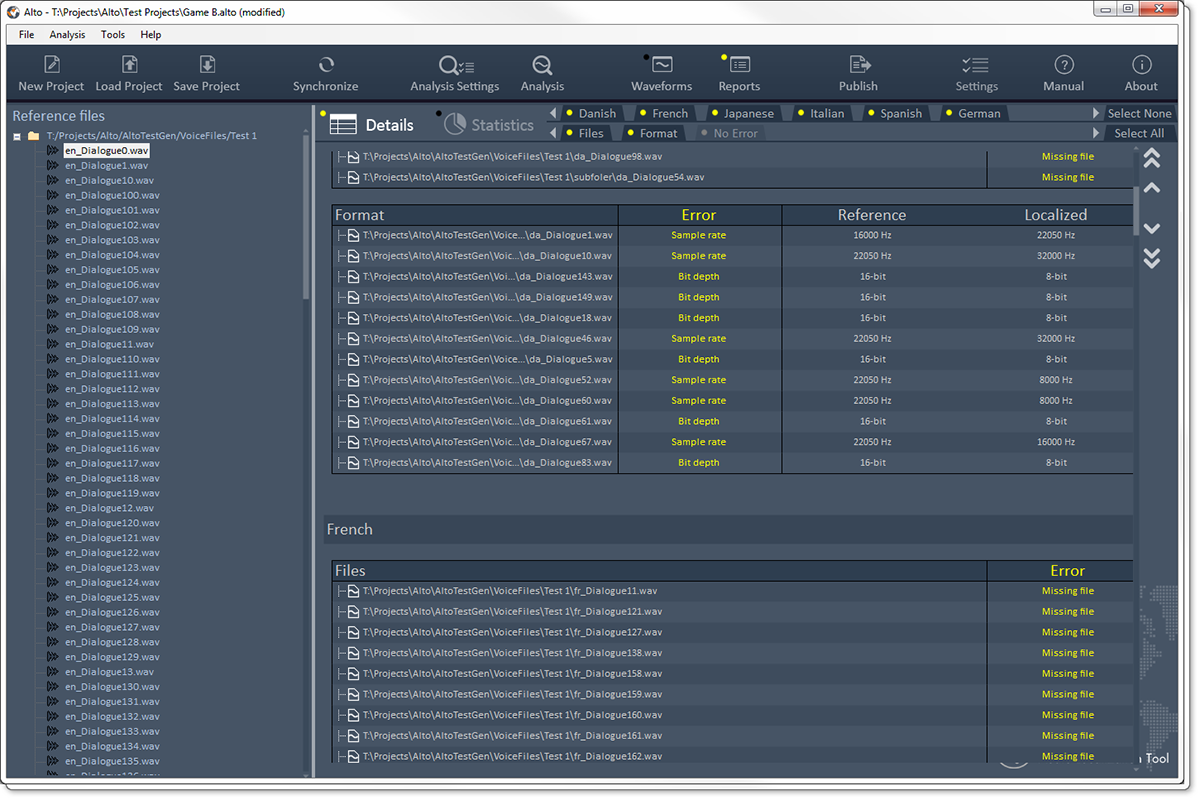

2 – Advanced analyses

Alto will analyze your localized dialogue files (or any sound files really) and find if anything is missing. It will also make sure that the audio format is correct (sample rate, bit-depth, number of channels). It will even check that the average and peak loudness, the duration, the leading and trailing silences are all within the margins of error you specified.
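To give an idea of what such checks involve, here is a minimal sketch that measures peak level and leading/trailing silence on a list of float samples, then compares the measurements with a reference file within tolerances. It is not Alto’s implementation: the -60 dBFS silence threshold, the function names and the tolerance format are assumptions, and average loudness (which would normally follow ITU-R BS.1770) is left out for brevity.

```python
import math


def to_db(amplitude):
    """Convert a linear amplitude (1.0 = full scale) to dBFS."""
    return 20 * math.log10(amplitude) if amplitude > 0 else float("-inf")


def analyze(samples, sample_rate, silence_db=-60.0):
    """Return peak level and leading/trailing silence (in seconds).

    Assumption: anything at or below `silence_db` counts as silence.
    """
    threshold = 10 ** (silence_db / 20)          # linear silence threshold
    loud = [i for i, s in enumerate(samples) if abs(s) > threshold]
    if not loud:                                 # the whole file is silent
        duration = len(samples) / sample_rate
        peak = max((abs(s) for s in samples), default=0.0)
        return {"peak_db": to_db(peak), "leading": duration, "trailing": duration}
    return {
        "peak_db": to_db(max(abs(s) for s in samples)),
        "leading": loud[0] / sample_rate,
        "trailing": (len(samples) - 1 - loud[-1]) / sample_rate,
    }


def check(measured, reference, tolerances):
    """List the metrics that differ from the reference by more than their tolerance."""
    return [name for name, tol in tolerances.items()
            if abs(measured[name] - reference[name]) > tol]


# 1 s at 1 kHz: 0.2 s of silence, a constant 0.5 signal standing in for speech,
# then 0.1 s of silence
rate = 1000
clip = [0.0] * 200 + [0.5] * 700 + [0.0] * 100
print(analyze(clip, rate))  # leading 0.2 s, trailing 0.1 s, peak about -6.02 dBFS
```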

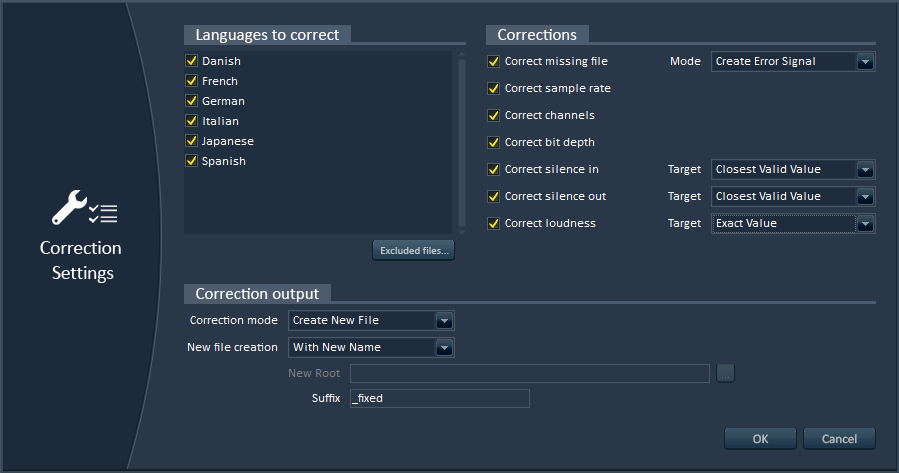

3 – Automatic correction of errors

Alto can correct the errors it finds automatically, saving you precious time. You can still choose to ignore some files or some types of errors. You can also indicate whether you want the correction to hit the exact value of the reference file or the nearest value in the valid range, in order to minimize the audio modifications.
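The difference between the two correction targets can be written in a few lines: either move the value all the way to the reference, or only to the nearest bound of the valid range (reference plus or minus tolerance), which is the smallest change that makes the file valid. A minimal sketch with hypothetical names, applied here to a peak level in dB:

```python
def correction_target(value, reference, tolerance, mode="nearest"):
    """Return the corrected value for one metric (hypothetical helper).

    mode="exact"   -- snap to the reference value
    mode="nearest" -- clamp into [reference - tolerance, reference + tolerance],
                      i.e. the smallest change that makes the value valid
    """
    if mode == "exact":
        return reference
    low, high = reference - tolerance, reference + tolerance
    return min(max(value, low), high)


def gain_db_for_peak(peak_db, reference_db, tolerance_db, mode="nearest"):
    """Gain (in dB) to apply so that the peak level becomes valid."""
    return correction_target(peak_db, reference_db, tolerance_db, mode) - peak_db


# A file peaking at -1 dBFS, reference -3 dBFS, tolerance 1 dB
print(gain_db_for_peak(-1.0, -3.0, 1.0, mode="exact"))    # → -2.0
print(gain_db_for_peak(-1.0, -3.0, 1.0, mode="nearest"))  # → -1.0
```

In "nearest" mode a file already inside the valid range gets a gain of 0 dB, so it is left untouched.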

4 – Awesome reports

Alto can generate exhaustive and great-looking reports for your team or your client. They can be exported in PDF, HTML or as Excel sheets, so you can adapt to whatever your interlocutor is using. They include all the errors found, statistics with pie charts per language and so on…

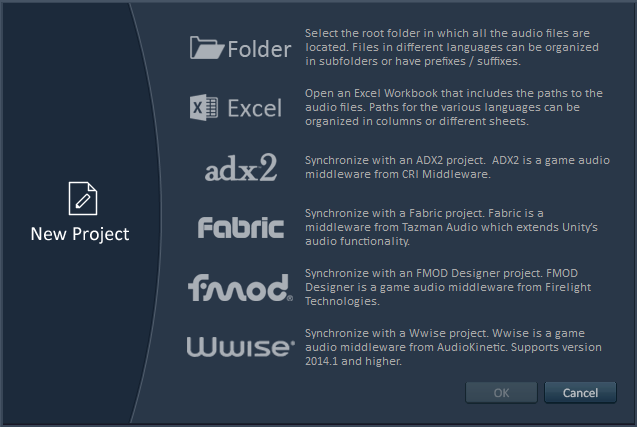

5 – Easy integration with your game audio middleware

Alto can import dialogue files from your ADX2, Fabric, FMOD or Wwise game audio project and synchronize with it. Know any other software that can do that?

6 – Easy integration with your own technology

Alto’s plug-in system allows you to interface with your proprietary tools and databases. Its command line version can be called from your build pipeline or from a third-party tool. It can also save reports in XML that can be easily read by other tools.

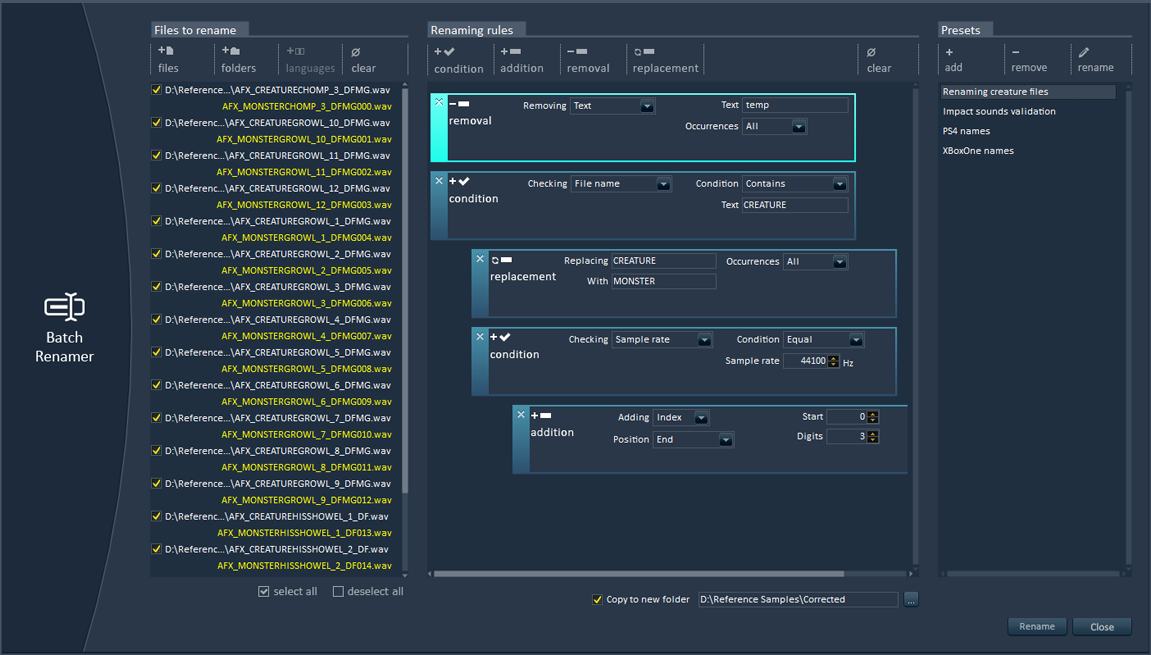

7 – Versatile Batch Renamer

Alto has an absolutely fantastic batch renamer (really, try it…). You don’t even need to have an Alto project open: just drop some files, create a renaming preset (based on conditional, additive, removal and replacement rules), press Rename and voilà! The batch renamer handles very complex scenarios easily and can even rename files based on their audio characteristics. Fantastic, I told you!
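A renaming preset of this kind can be modeled as an ordered list of rules applied to each file name. The sketch below is a hypothetical model, not Alto’s preset format: each rule is a function from a file stem to a new stem, and a conditional rule applies its inner rule only when a regular expression matches. Renaming based on audio characteristics is not covered.

```python
import re
from pathlib import PurePath


def replace(old, new):
    """Replacement rule: substitute every occurrence of `old`."""
    return lambda stem: stem.replace(old, new)


def add(prefix="", suffix=""):
    """Additive rule: add text before and/or after the stem."""
    return lambda stem: f"{prefix}{stem}{suffix}"


def remove(pattern):
    """Removal rule: delete every match of a regular expression."""
    return lambda stem: re.sub(pattern, "", stem)


def when(pattern, rule):
    """Conditional rule: apply `rule` only if `pattern` matches the stem."""
    return lambda stem: rule(stem) if re.search(pattern, stem) else stem


def rename(filename, rules):
    """Apply the rules in order to the file stem and keep the extension."""
    path = PurePath(filename)
    stem = path.stem
    for rule in rules:
        stem = rule(stem)
    return stem + path.suffix


# Hypothetical preset: normalize separators, drop "_final", tag French lines
preset = [
    replace("-", "_"),
    remove(r"_final$"),
    when(r"^fr_", add(suffix="_FR")),
]
print(rename("fr-hero-greeting_final.wav", preset))  # → fr_hero_greeting_FR.wav
```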

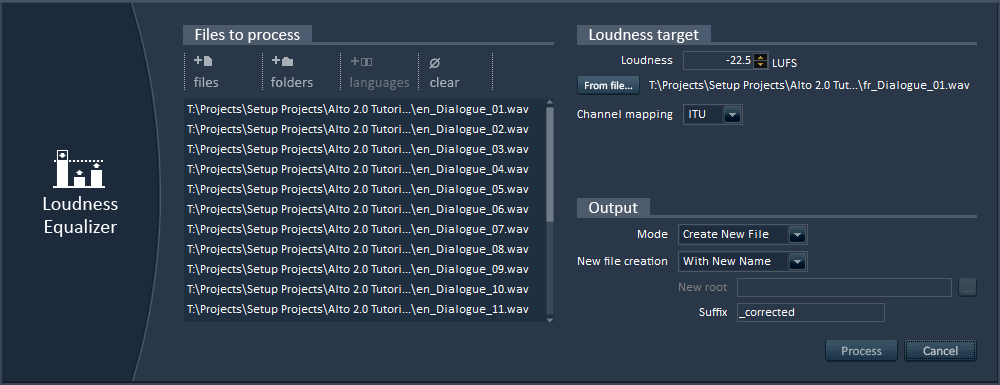

8 – Loudness Equalization

Again, you don’t even have to create an Alto project. Open Alto, open the loudness equalizer tool, drop your audio files (not necessarily dialogue) from the Windows Explorer, enter a target LUFS value or get it by analyzing a sound file, press Process and you are done! It’s that simple!
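Once the loudness of a file has been measured, equalizing it comes down to a simple gain calculation: the gain in dB is the target minus the measured integrated loudness, converted to a linear factor to scale the samples. A minimal sketch assuming the measurement (normally per ITU-R BS.1770) has already been done; the function names are hypothetical, and a real tool would also have to deal with clipping, which this sketch only reports.

```python
def loudness_gain(measured_lufs, target_lufs):
    """Linear gain factor that moves `measured_lufs` to `target_lufs`."""
    gain_db = target_lufs - measured_lufs  # a 1 LU difference needs 1 dB of gain
    return 10 ** (gain_db / 20)


def apply_gain(samples, gain):
    """Scale float samples and report whether any sample would clip."""
    scaled = [s * gain for s in samples]
    clipped = any(abs(s) > 1.0 for s in scaled)
    return scaled, clipped


# A line measured at -29 LUFS, normalized to a -23 LUFS target: +6 dB
gain = loudness_gain(-29.0, -23.0)
print(round(gain, 3))  # → 1.995
```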

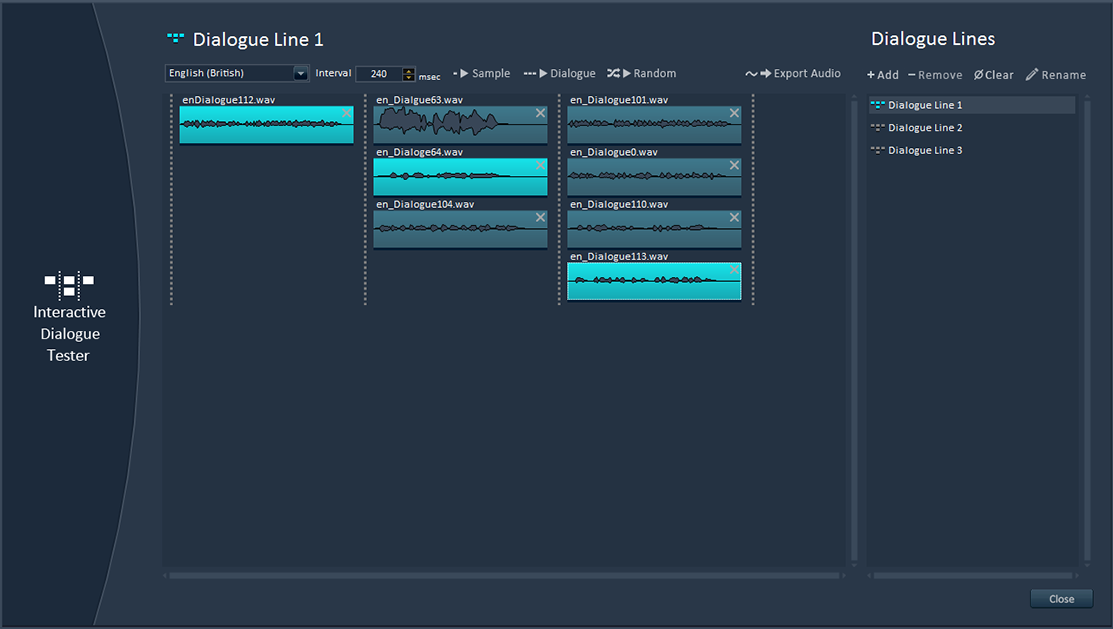

9 – Interactive dialogue tester

No need to fire up your game audio middleware or to ask an audio programmer: Alto comes with its own feature that allows you to test interactive dialogue, including randomization, gaps between sentences etc… You can check how concatenated lines will sound together in a few clicks, with a very user-friendly interface. You can even use it to test the sequencing of sound effects.

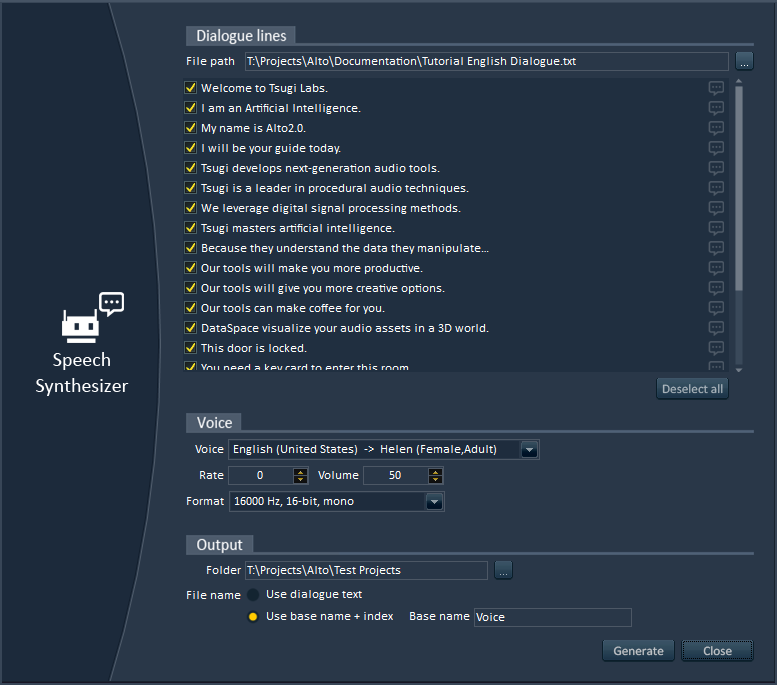

10 – Creation of placeholder dialogue

Thanks to Alto’s speech synthesizer, you can instantly create placeholder dialogue. Here too, no need to create a project. Start Alto, open the Speech Synthesizer tool, import the text or Excel file containing the lines to generate, select a voice in the right language (yep, not only in English!), and there you go: a wave file is generated for each dialogue line!

There are many other cool features in Alto that will make your life as a game audio professional a lot easier. But don’t take my word for it, download the free demo version and give it a try: http://www.tsugi-studio.com/?page_id=1923

Japanese Game Events

This post is about some of the game development events that happen in Japan.

With BitSummit and GTMF both happening this week, I thought it could be useful to talk a bit about some of the game events in Japan. If you are following the game industry, you probably heard about the Tokyo Game Show already, but there are a few lesser known events which are equally or even more interesting for game developers.

I’m only listing the events I know, either because Tsugi has been a sponsor, because I spoke there, or at least because I visited. There are probably other events. If you need more information about any of them, don’t hesitate to let me know and I will update this post.

As in the previous post, I will link to the English versions of the web sites (when they exist), but keep in mind that there is probably more information on the Japanese pages.

BitSummit

BitSummit is probably the indie game event with the most visibility outside of Japan. The organizers are well connected with the press – both Japanese and foreign – so you may have read about it already. The event is held in Kyoto which by itself is reason enough to visit! Local game companies such as Q-Games are heavily involved.

In addition to all the indie games and sponsor booths, the musical program is a strong point of BitSummit with many performances by famous game composers and chiptune artists. There is also an awards ceremony at the end of the show to recognize the best game, audio or visual design of the indie projects on the floor.

BitSummit is now in its third year, and its organization has been handed over to JIGA (Japan Independent Games Aggregate), a newly formed group of game companies, and Indie Mega Booth.

Digital Games Expo

Digital Games Expo – or Dejige – is a yearly indie-game event that happens in the famed Akihabara district in Tokyo. We participated last year and it was very well organized, with lots of interesting creators showing up.

There is a related event called Retro Digital Games Expo which is – you guessed it – dedicated to retro gaming.

http://digigame-expo.org/retro

Tokyo Indie Fest

Another event for indie game developers, Tokyo Indie Fest 2015 was actually held at the same venue as Dejige in Akihabara, i.e. the UDX Akiba Square, and was therefore similar in size. However, it is a 3-day event.

They have some pretty good information in English, including some videos, so I’ll let you browse their web site:

http://tokyoindiefest.com/indexglobal.html

GTMF

The Game Tools and Middleware Forum (GTMF) is held in July in two parts: first in Osaka and then, a few days later, in Tokyo. As its name suggests, it is the occasion for game middleware companies to present their products.

There is an exhibition floor and sponsored information sessions. The GTMF also facilitates meetings between middleware companies and their customers through its “MeetUps” system. The event is relatively modest in size and held in a hotel. It is free for visitors, so it is a good way to learn about the latest game technology made (or used) in Japan.

Cedec

Cedec is the equivalent of the GDC (Game Developers Conference) in Japan. It is organized by CESA (Computer Entertainment Supplier’s Association) and held in the Pacifico conference center in Yokohama. Compared to the huge GDC at the Moscone Convention Center in San Francisco, it is much smaller.

Nevertheless, it is “the” game developer conference in Japan, with an exhibition floor and sessions covering the usual game development topics (I like how many of the audio sessions happen in room 303…). There is also an international track with foreign speakers, but otherwise all sessions are in Japanese. The Cedec Awards ceremony celebrates the best achievements of the year.

In addition to the “traditional” Cedec held in Yokohama at the end of August, there is also one in Sapporo (in the north of Japan, on Hokkaido) and, more recently, a Kyushu Cedec (in the west of Japan).

http://cedec.cesa.or.jp/2015/index.html

http://kyushucedec.jp/

Tokyo Game Show

The Tokyo Game Show, or TGS, happens each year a few days after Cedec (i.e. in September) and is a mammoth of a show. Despite the name, it is not really in Tokyo but in Chiba (not far though), at the Makuhari Messe. There are business days and public days, the latter being very, very packed…

I won’t go into the details, as you can find a lot of information about it online. It is worth noting for the indie game developers out there that last year TGS had a sizable indie corner (sponsored by Sony).

http://expo.nikkeibp.co.jp/tgs/2015/exhibition/english/

Indie Stream Fes

Indie Stream is a web site where indie game developers (but also publishers, middleware companies or media who have an interest in indie games) can have a page to present their products or services. They also have forums. Each year they organize the Indie Stream Fes at the Makuhari Messe, at the same time as the Tokyo Game Show, with their own awards and lightning talks by indie creators.

https://indie-stream.net/en/isf/isf2015

Regular events

This could be the topic of another post but of course, in addition to these yearly events, there are monthly – or at least more frequent – gatherings of game developers such as Tokyo Indies, Kyoto Indies etc… or meetings of the special interest groups of the IGDA’s Japanese branch, which are open to all.

Do you have any questions about game development in Japan? Let me know and I will write a post on it (well… if I’m qualified to do so!)

Japanese Game Tools and Middleware

This post looks at a few Japanese game middleware companies which are not necessarily well known outside of Japan.

There was a time when you could joke that the only tool needed by the Japanese game industry was Excel. That was referring both to the lack of focus on the development of nice tools and middleware (at least compared to the West) and to the mastery Japanese developers demonstrated with Microsoft’s spreadsheet.

If the Japanese industry is still somewhat lagging behind in terms of tools and middleware in general, it has certainly made a lot of progress recently and we are starting to see some very nice tools emerge. I’m listing below a few Japanese game middleware companies I know of (mostly because I know people working there or I have had business with them). Although I’m indicating links to their English pages (when they exist), note that the Japanese pages may provide more information.

CRI Middleware

The modern history of CRI Middleware started in 2001, but before that they were known as CSK Research Institute (CRI) and did some research for Sega in the 90s, mostly on audio and video encoding. Their products are now grouped under the CRIWARE umbrella and include ADX2, Sofdec2 and File Magic PRO.

ADX2 is the leading game audio middleware in Japan, with more than 2800 games using it. It has all the features you would expect from a game audio middleware and then some. Icing on the cake, it’s quite easy to use for sound designers and programmers alike. There is also a free ADX2 LE version available for indie game developers. Sofdec is a movie encoder / decoder (it was recently used on Destiny, for example). File Magic PRO, as you probably guessed, is a file / packaging system. There is also a CRIWARE Unity plug-in including all of the above as a package for Unity.

http://www.cri-mw.com/index.html

Silicon Studio

Silicon Studio may be one of the best known Japanese middleware providers in the West as they have been exhibiting at GDC. They are focusing on both full-fledged engines (like Orochi or Paradox, a new open-source C# game framework), and graphics engines (like Mizuchi, a real-time rendering engine and Yebis, a middleware for advanced optical effects used by Final Fantasy XV). By the way, if you are wondering where all these names came from (well, except for Paradox), it’s from Japanese mythology.

As a side note, since I regularly receive emails from people wanting to work in the game industry in Japan, it is worth mentioning that Silicon Studio has a lot of foreigners on staff, so that’s probably one of your best bets if you want to work in game technology in Japan.

http://www.siliconstudio.co.jp/en

Matchlock

Matchlock is somewhat related to Silicon Studio: they share the same CEO. Their product is Bishamon, a middleware specialized in particle effects. Unsurprisingly, Bishamon also comes from the name of a Buddhist deity.

http://www.matchlock.co.jp/english/

Web Technology

Don’t be fooled by their name: WebTechnology mostly builds graphics tools and technologies. One of them, relatively popular among indie game developers, is OPTiX SpriteStudio, which allows you to create 2D animations for your games.

Actually, at Tsugi we did a special version of DSP Anime that works with SpriteStudio:

http://www.tsugi-studio.com/?page_id=1749

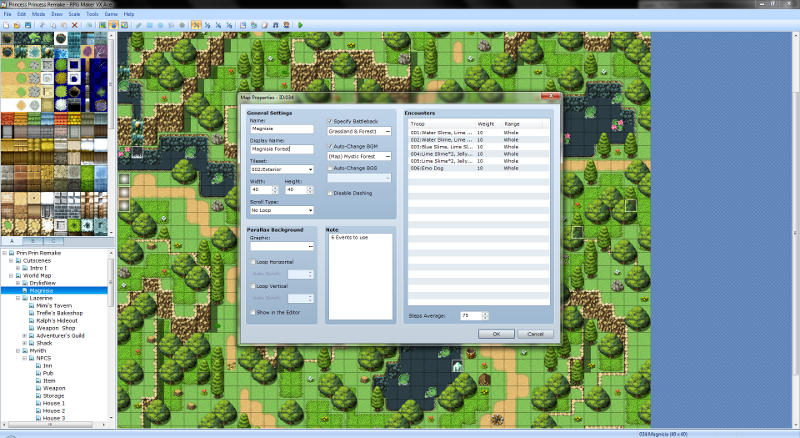

Enterbrain

Although Enterbrain is probably better known as a publisher of game-related magazines such as Famitsu, they also publish tools to build games, the most famous being RPG Maker (the latest version is RPG Maker VX Ace).

MonoBit

I don’t know them very well, except for the fact that one of our former interns is now working there :-). They are making a game engine.

Tsugi

I would not be a good founder if I didn’t talk about our little venture, Tsugi. Based in Niigata, we are developing sound tools and middleware for the game industry, putting the emphasis on workflow efficiency and creative options.

Professional software includes Alto, an audio localization tool, AudioBot, an audio batch processor, and QuickAudio, a sound extension for Windows Explorer. GameSynth is our upcoming procedural audio middleware. We also have two sound effects generators for indie game developers, DSP Anime and DSP Retro, which enjoy some popularity in Japan.

Do you have any questions about Japanese tools and middleware? Don’t hesitate to ask! I will try to update this post.

Generating thousands of dialogue files

This post describes how we generated thousands of voice files to test Alto, the new audio localization tool released by Tsugi.

So many files, so little time

With the explosion of content in AAA games, it is not rare to end up with thousands or even tens of thousands of dialogue lines in a project once they are all translated. Some lines may be recorded internally, others by external studios or localization agencies. But how do you manage quality control? Comparing all these dialogue lines manually would be cumbersome, error-prone, and actually pretty much impossible without an army of little elves with multilingual skills.

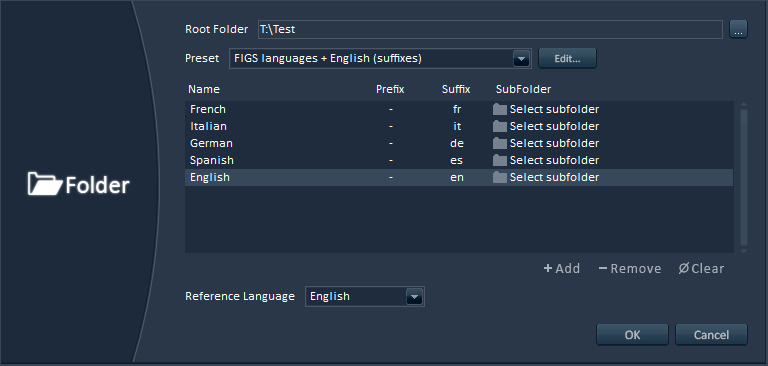

Enter Alto. Alto is an audio localization tool (see how clever?) that compares these thousands of dialogue lines in several languages automatically, finding missing or misnamed files, checking their audio format (sample rate, channels and bit depth), their duration (including leading and trailing silence), their levels (average and peak) or even their timbre. Alto can get the data directly from your folders (with files organized in subfolders, having prefixes or suffixes…) or by reading the files referenced by an Excel sheet or even by importing a game audio middleware project (ADX2, FMOD and Wwise are all supported). Here are a couple of screenshots:

Once the analysis is done, you can give a nice PDF / Excel / HTML report to your manager or client, showing the quality of your work. Or go back and check all the errors! You can also get an XML file so that third-party tools can read it and, for example, automatically mark dialogue as final or placeholder.

It’s incredible how many small (and sometimes bigger) errors the studios who beta-tested Alto found in their older projects. That does not mean their work was bad, simply that it is nearly impossible to get perfect localized dialogue in each language on big projects without some kind of automated process to test everything.

Computer polyglotism

Testing Alto meant that we needed thousands of audio files in many languages. This kind of data is quite hard to get. Asking some of our clients for files from old projects would have taken time due to IP concerns. Of course, we planned to have beta-testing with game studios, but we at least needed something to help us develop the program before it even reached the beta-testing stage.

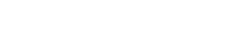

It’s not that uncommon in the game industry to generate placeholder dialogue lines with a speech synthesizer. So I decided to do the same with the test files and to write an application which would create all these files for us.

This application takes a text file as input. It starts by segmenting it into sentences based on punctuation marks. Then I use the Microsoft Speech Synthesizer to generate the audio files for the reference language. The next step is to send the sentences to the Google Translate API so that they can be synthesized in other languages. It turns out that the Google Translate API is no longer free (and the alternatives are either not free or of lesser quality). Even for a few files, you need to register, enter your credit card information, get an API key etc… However, Google’s interactive translation web page still makes callbacks using public access without an API key. By looking at these AJAX calls and sending them directly from code, it is possible to get your translation done (you just need to parse the JSON that is returned).
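The first step, splitting the input text on punctuation marks, can be sketched with a regular expression. This is a simplified assumption of how such a segmenter might work (the post does not show AltoTestGen’s code): it splits after ".", "!", "?" or "…" when whitespace follows, so abbreviations such as "Dr." would be split incorrectly. The file naming helper is hypothetical.

```python
import re

# Split where sentence-ending punctuation is followed by whitespace.
# Simplification: abbreviations like "Dr." are not handled.
SENTENCE_END = re.compile(r"(?<=[.!?…])\s+")


def segment(text):
    """Return the non-empty, stripped sentences of `text`."""
    return [s.strip() for s in SENTENCE_END.split(text.strip()) if s.strip()]


def line_ids(sentences, prefix="line"):
    """Hypothetical naming scheme: one zero-padded ID per sentence."""
    return {f"{prefix}_{i:04d}": s for i, s in enumerate(sentences, start=1)}


text = "Hello there. Are you ready?  Let's go!\nFollow me…"
for name, sentence in line_ids(segment(text)).items():
    print(name, sentence)
# line_0001 Hello there.
# line_0002 Are you ready?
# line_0003 Let's go!
# line_0004 Follow me…
```

Each ID would then become the file name of the synthesized line in every language, which is what lets a tool like Alto match files across languages.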

Since we are sending the text sentence by sentence and not as a big chunk, we don’t have a problem with the size limit imposed by Google’s translation web page. Of course, this also means that we make many more calls, which could be a problem speed-wise. Fortunately, it ends up being very fast. Having translated the text into various languages, I sent it to the corresponding voices I downloaded for the Microsoft Speech Synthesizer. You can find 27 of them here! (On that topic, make sure that the voices you are downloading are for the version of the synthesizer you have installed.)

Purposely wrong

Finally, I needed to introduce some errors to make sure Alto was doing its job correctly. The Microsoft Speech API allows us to choose different types of voices as well as different rates and volumes of speech, which was an easy starting point to add some variations. In addition, the program re-opens some of the files generated and saves them in a different format, with a different number of channels, sample rate or bit-depth. Some files are also renamed or deleted to simulate missing and misnamed files. I used some existing Tsugi libraries to add some simple audio processing to stretch the sounds, add silence at the beginning or at the end, as well as to insert random peaks. To test the spectral centroid, the application also boasts a few filters.
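The audio-level errors (extra silence, random peaks, wrong level) can be sketched on raw float samples. These functions are hypothetical stand-ins for the Tsugi libraries mentioned above, written in plain Python so they run without any audio package; re-saving files with a different format, stretching and filtering are left out.

```python
import random


def add_silence(samples, sample_rate, lead=0.0, trail=0.0):
    """Pad with `lead` and `trail` seconds of digital silence (hypothetical helper)."""
    return ([0.0] * round(lead * sample_rate) + list(samples)
            + [0.0] * round(trail * sample_rate))


def insert_peaks(samples, count, level=0.99, rng=random):
    """Overwrite `count` distinct random samples with near full-scale clicks."""
    out = list(samples)
    for index in rng.sample(range(len(out)), count):
        out[index] = level if rng.random() < 0.5 else -level
    return out


def change_gain(samples, factor):
    """Simulate a line recorded or exported at the wrong level."""
    return [s * factor for s in samples]


line = [0.1] * 1000  # stand-in for one synthesized dialogue line at 1 kHz
damaged = insert_peaks(add_silence(line, 1000, lead=0.25),
                       count=2, rng=random.Random(0))
print(len(damaged))  # → 1250
```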

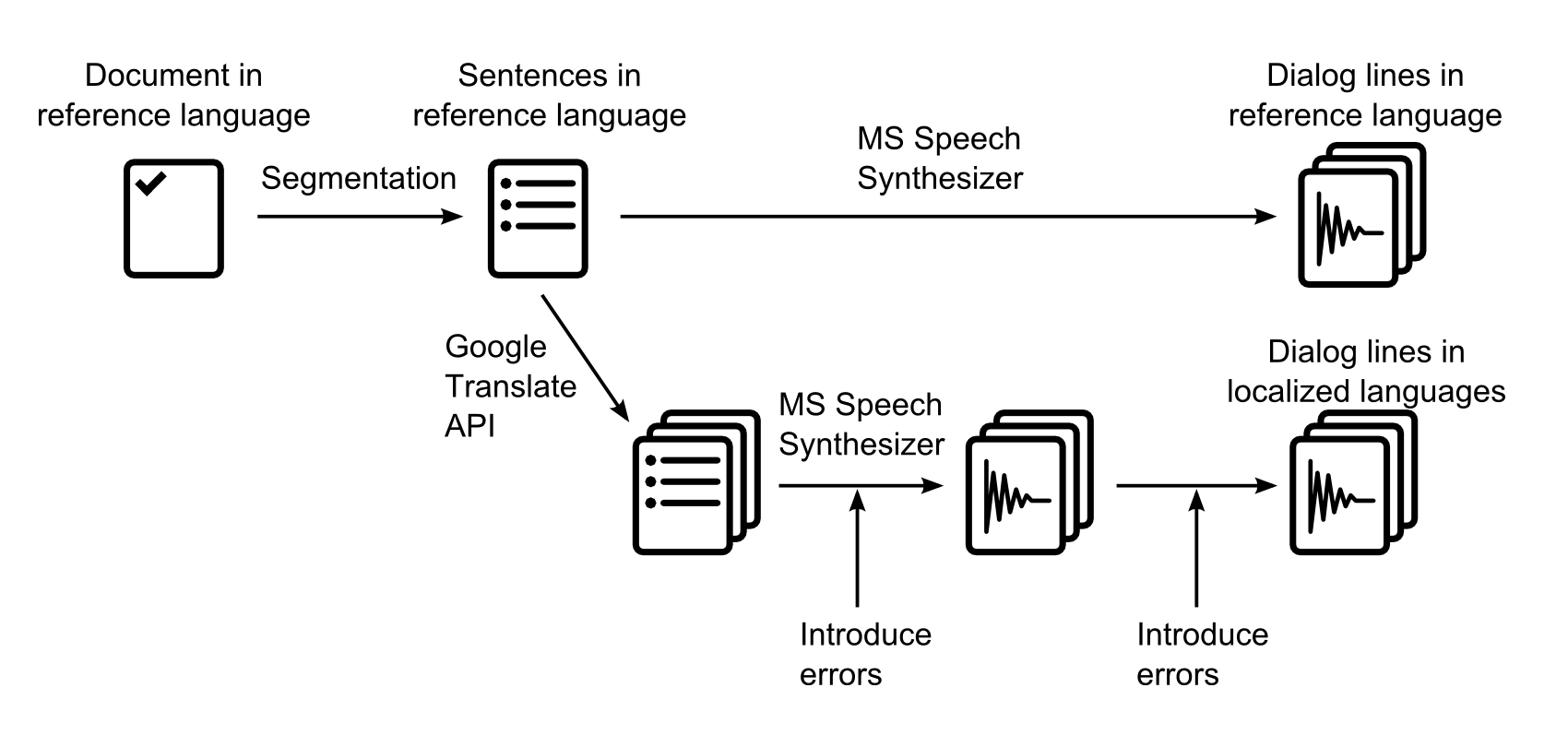

The user interface of our little tool allows us to select the voices (and therefore the corresponding languages) we want to generate dialogue for, as well as a percentage for each type of error we want to insert. Playing with the percentages allows us to test various cases from one or two tiny mistakes lost in thousands of files to all files having multiple errors. The program also generates a log file so we can see which errors were added to which files and check that they are actually detected in Alto.
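Choosing which files receive which error, and logging it so that the detections can be checked in Alto afterwards, can be sketched as follows. The error names, percentages and log format are hypothetical. Each error type is drawn independently per file, so the percentages are only approximate on a given run and a file can receive several errors, which covers the "all files having multiple errors" case.

```python
import random


def plan_errors(files, percentages, seed=None):
    """Map each affected file to the list of error types to inject.

    percentages -- dict of error type -> percentage of files (0 to 100)
    """
    rng = random.Random(seed)
    plan = {}
    for name in files:
        errors = [err for err, pct in percentages.items()
                  if rng.random() * 100 < pct]  # independent draw per error type
        if errors:
            plan[name] = errors
    return plan


def write_log(plan, path):
    """One line per modified file: name, tab, then the injected error types."""
    with open(path, "w", encoding="utf-8") as log:
        for name, errors in sorted(plan.items()):
            log.write(f"{name}\t{', '.join(errors)}\n")


files = [f"line_{i:04d}.wav" for i in range(1, 1001)]
plan = plan_errors(files, {"missing": 1, "wrong_sample_rate": 2,
                           "leading_silence": 5}, seed=0)
print(len(plan), "files modified")
```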

Here is a picture of AltoTestGen:

Not very pretty, but it did the job! All in all, after just a couple of hours of programming, we had thousands of lines in about 20 languages and could generate thousands of new ones in a matter of minutes. Although the voice acting is definitely not AAA, these files are easy to recognize and perfect for our tests: mission accomplished!